PS3 & Xbox360 in the Real World

By K. Parashar - August 06, 2005

It’s practically a law of nature that with every new console generation, armchair hardware architects will put up info saying that one machine is superior to the other and will completely change the world, resulting in games of such dazzling realism that you’ll be likely to pass out with each title. Ah, yes, and of course, there are the marchitecture campaigns from the various companies, designed to have as divisive an effect as possible on the community. Fanboys, of course, will lap up the nonsense and hold it as the gospel truth. And the average person will simply approach it in the form of “I don’t know what any of that means, but it sure sounds like he knows what he’s saying.”

There are always pitfalls when you make comparisons based on theoretical limits of devices. The fact of the matter is that from what we know about Xbox360 and PS3 at this time, the differences aren’t that big overall. It’s going to be the little things that make all the difference in real games.

Multicore is the general direction all CPU makers are moving in, as it provides better long-term scaling than trying to push past the IPC (instructions per cycle) and clock speed barriers. When you think about it, going from earlier x86 CPUs to the current crop of Athlon64s and P4s, we’ve ended up using around 18x the number of transistors in order to increase IPC by a factor of about 2-3 (that and adding 300 or so new instructions). Consoles are a little different from PCs, though, in that they rest on a single design that has to carry the platform for a very long time. And yet, both of the next-gen consoles we know anything about (and most likely the third as well) have taken a multicore path. Why? For one, the added complexity of all this extra IPC-boosting logic makes chip design very difficult and very involved, and it depends on a great deal of prior experience. This is why the PC CPU market is dominated by only two chipmakers. On top of that, adding all this extra logic piece by piece over several generations of chips has created a tangled web of interacting systems that somehow manage to work. This makes for what most engineers refer to as a fragile framework: it works well, but it doesn’t fail well. Going multicore with simpler cores proves to be a cheap way to get high throughput that should hopefully be sufficient for a very long time, and to do it at low power consumption.

There’s a key distinction to be made here between “performance” and “throughput.” If any of you read AnandTech’s short-lived article claiming that “the real-world performance of the Xenon CPU is about twice that of the 733MHz processor in the first Xbox” and that “floating point multiplies are apparently 1/3 as fast on Xenon as on a Pentium 4”, the problem is that it doesn’t distinguish between the profile of a single operation and the total throughput of the CPU. There’s no question that the single-threaded performance of next-gen CPUs will almost invariably be much slower than that of current PC CPUs. It’s not that the operations themselves are slow so much as that more delays are incurred between operations. As I said, we’ve got loads of transistors in our PC CPUs to keep a high IPC going. Both Xenon and CELL have largely forgone that extra hardware and instead used the transistor budget to put more functional hardware in place. This kills single-threaded performance, but because the chip can run MANY threads, overall throughput can still be very high.

There’s no question, however, that this takes some massaging to actually work in practice. Throughput-oriented computing is not new to the server world (witness products like Niagara and Itanium), but it is certainly new to gaming. The worst part is that 360 and PS3 take entirely different approaches to the problem. Xbox360 takes the SMP route, while PS3 takes a sort of master-slave route. Xbox360 is better suited to large-scale threading where the work per thread is relatively even and your main concern is synchronization. PS3 is seemingly better suited to micro-thread type tasks where the operations themselves are small, but there are many of them and they are completely independent.
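To make the SMP side of that contrast concrete, here is a minimal Python sketch of the pattern, assuming two hypothetical subsystem threads (`physics` and `audio`) that share one memory space and meet at a per-frame barrier. Python’s GIL means this only shows the structure of SMP-style threading, where each thread owns a coarse job and synchronization is the main concern, not its performance.

```python
import threading

FRAMES = 3  # hypothetical number of frames to simulate

# Shared state: in the SMP model every thread sees the same memory.
state = {"physics": 0, "audio": 0}
state_lock = threading.Lock()

# Each subsystem thread waits here at the end of every frame, so no thread
# runs ahead into the next frame before the other finishes the current one.
end_of_frame = threading.Barrier(2)


def run_subsystem(name: str) -> None:
    """Hypothetical coarse-grained subsystem: one long-running thread per job."""
    for _ in range(FRAMES):
        with state_lock:  # guard shared memory before touching it
            state[name] += 1
        end_of_frame.wait()


threads = [threading.Thread(target=run_subsystem, args=(n,)) for n in ("physics", "audio")]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(state)  # {'physics': 3, 'audio': 3}
```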

There’s no real empirical basis within gaming to say that one method is easier on developers than the other. It’s really something that can only be shown after the fact, primarily because the toolset will define the bulk of the development experience. In the case of 360, there’s a certain advantage to SMP in that the performance profile of any given thread is the same and always predictable, regardless of the core it’s running on. With CELL, the code has to be separated into SPE tasks and PPE tasks. The adage that game code is around 80% “general purpose” and 20% floating point is certainly accurate if you’re talking about code volume. The problem is that game code is never the big time consumer in a game. It’s the engine code, where we’re always reinventing the wheel to get just a few more polygons down the pipe or to make sure we can hold a consistent framerate. That end of things is certainly floating-point heavy. More importantly, game code usually doesn’t multi-thread well because games are not really parallel: every step goes in a very specific order because it depends on the information prepared by the step before it. XeCPU would certainly have an advantage in a case where one box is used as the server for a multiplayer game at a LAN party.

When I see things like Major Nelson’s little post-E3 counterargument in favor of Xbox360, I can’t help but think that he chooses all the wrong arguments, picking ones the average Joe and the fanboys can understand rather than ones that are actually valid.

Before I get into the finer points, I’d rather start off by pointing fingers at the dumbest of all the arguments -- the purported memory bandwidth advantage. The comparison claims an advantage for Xbox360’s 278.4 GB/sec of memory bandwidth over PS3’s 48 GB/sec. Of course, the stupidity of this statement is that the 48 GB/sec of PS3’s bandwidth is all point-to-point bandwidth, whereas 256 of Xbox360’s 278.4 GB/sec is internal to a single die. If I wanted to include PS3’s die-internal bandwidth, I could include the fact that CELL’s EIB ring allows up to 96 bytes per cycle to be sent between the various SPEs and the PPE, which at a clock speed of 3.2 GHz amounts to an extra 307.2 GB/sec. Kind of puts the argument to rest, doesn’t it? Hell, if I wanted to be really stupid, why not include the bandwidth between the SPEs and their own SRAMs (230.4 GB/sec per SPE)? Either way, neither comparison is at all valid. The GPU of Xbox360 has a daughter die containing 10 MB of DRAM plus a little bit of extra logic. The bandwidth between this logic and the DRAM is 256 GB/sec. However, this die cannot communicate with other devices at this rate or anywhere near it. The bandwidth between this daughter die and the functional part of the GPU is only 32 GB/sec, and the bandwidth between the GPU and any other device is at most 22.4 GB/sec. No matter how fast the eDRAM is, data will never move to and from other devices faster than that 22.4 GB/sec. Comparing the sum of chip-internal and point-to-point bandwidth on one machine to the point-to-point bandwidth alone on another is fundamentally stupid. It’s not so much apples-to-oranges; it’s a pile of apples compared to a mixed fruit basket that happens to contain a few apples among other things.
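The arithmetic behind these figures is simple enough to check. This sketch uses only the numbers quoted above (in GB/sec); the constant names are illustrative. It reproduces the EIB figure (bytes per cycle times billions of cycles per second gives GB/sec) and shows that the 278.4 GB/sec total is just the 256 GB/sec eDRAM-internal number plus the 22.4 GB/sec that actually leaves the GPU.

```python
# Figures quoted in the text, in GB/s (names are illustrative).
XBOX360_TOTAL_CLAIMED = 278.4
XBOX360_EDRAM_INTERNAL = 256.0   # inside the daughter die only
XBOX360_GPU_EXTERNAL_MAX = 22.4  # best case between the GPU and any other device
PS3_POINT_TO_POINT = 48.0

# CELL's EIB: 96 bytes per cycle at 3.2 GHz.
EIB_BYTES_PER_CYCLE = 96
CELL_CLOCK_GHZ = 3.2
eib_gb_per_s = EIB_BYTES_PER_CYCLE * CELL_CLOCK_GHZ  # bytes/cycle * Gcycles/s = GB/s

external_part = XBOX360_TOTAL_CLAIMED - XBOX360_EDRAM_INTERNAL

print(f"EIB die-internal bandwidth: {eib_gb_per_s:.1f} GB/s")
print(f"Xbox360 claimed total minus eDRAM-internal: {external_part:.1f} GB/s")
print(f"Point-to-point only: Xbox360 {XBOX360_GPU_EXTERNAL_MAX} vs PS3 {PS3_POINT_TO_POINT} GB/s")
```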

Measuring point-to-point memory bandwidth alone is actually more meaningful anyway, since it’s this bandwidth that has always been a limiting factor for performance. Bandwidth within the silicon area of a single chip is rarely a problem until a chip becomes too complex for its own good. By that measure, Xbox360 has at most 22.4 GB/sec to PS3’s 48 GB/sec. Not such good-looking numbers for 360. Of course, we could confine ourselves to the CPU and point out that 360 has 21.6 GB/sec of memory bandwidth to the CPU vs. PS3’s 25.6 GB/sec. That is actually not a very big difference in practice. The fact of the matter is that neither CPU will be all that happy with the memory bandwidth it gets. In other cases of multi-core processors being limited by bandwidth, the typical workaround is quite simple -- make the cache large. Neither console really does this, most likely due to production cost. A 1 MB L2 cache like the one in Xbox360 may only have a miss rate of around 5%, but that 5% can translate to an average CPI cost of some 26 cycles. In layman’s terms... that’s extremely bad. It means that if you’re not careful, single-threaded performance on PS3 or Xenon could be outpaced by that of a Dreamcast.
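A figure in the neighborhood of 26 cycles falls out of the standard expected-stall formula: average stall cycles per access = miss rate × miss penalty. This sketch assumes a penalty of about 520 cycles, a hypothetical value chosen to sit in the “500+ cycle” range of cache-miss stalls reported for Xbox360; the real penalty varies.

```python
def avg_stall_cycles(miss_rate: float, miss_penalty_cycles: float) -> float:
    """Expected extra cycles per memory access caused by cache misses."""
    return miss_rate * miss_penalty_cycles


# Assumed values: ~5% L2 miss rate, ~520-cycle miss penalty (hypothetical).
cost = avg_stall_cycles(0.05, 520)
print(f"{cost:.1f} extra cycles per access on average")  # 26.0
```

Multiplying this by the fraction of instructions that touch memory turns it into a per-instruction (CPI) contribution. Since the cost scales linearly with the miss rate, a larger cache that halves the misses halves the penalty, which is why it is the usual workaround.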

Now, a valid argument using this 256 GB/sec of bandwidth on the daughter die would have concerned “virtually free” antialiasing, or the fact that it can potentially improve the relative efficiency of the GPU. The fast eDRAM on the daughter die is theoretically quite effective in these matters, but these are things that are impossible to prove without actual field testing. Instead, the comparison sets the wrong numbers against each other, because the layman sees a numerical comparison as completely objective. By the way, I heard at one point that 772.6 is greater than 278.4 and 48 combined. That proves that Nintendo Revolution will be the most powerful console ever! How? I don’t know; I just made it up, but you can’t deny the numbers, right?!

The supposed general-purpose computing advantage of 360 is also erroneous, because it is based only on the number of so-called GP cores and not on the actual execution profiles of the CPUs. It’s certainly true that of CELL’s theoretical 217.6 GFLOPS, 179.2 come from vector ops on the SPEs, and another 25.6 GFLOPS come from the vector unit on the PPE. Xbox360 is not exempt from this point, either -- 76.8 of its 115.2 GFLOPS come from vector ops. However, all these pipes can handle both integer and floating point, and there’s nothing inherently preventing those vector units from running general-purpose code. They’re just not very good at it. Moreover, as bad as the SPEs are at general-purpose code, referring to them as DSPs is nonsense and nothing short of childish on MS’ part. If we were to hypothetically run all the vector units of both CPUs on entirely scalar float/int code, we’d end up with the following numbers -- XeCPU: 48 GFLOPS, CELL: 64 GFLOPS. Not looking so good for Xbox360. Either way, though, numbers like that aren’t very realistic, because no game programmer in the world would actually WANT to use the vector units in a scalar fashion -- mainly because the raw throughput difference is so huge, and because vector units are so sensitive to instruction latency.
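The vector portions of these peak figures decompose as units × flops per cycle × clock. This sketch assumes 7 active SPEs, a 3.2 GHz clock throughout, and a 4-wide single-precision fused multiply-add counted as 8 flops per cycle per vector unit; those are assumptions about how the vendor numbers were derived, not something stated above. It recovers the 179.2, 25.6 and 76.8 GFLOPS figures and shows what remains of each total once the vector units are set aside.

```python
CLOCK_GHZ = 3.2
VEC_FLOPS_PER_CYCLE = 4 * 2  # assumed: 4 lanes x (multiply + add) per FMA


def peak_gflops(units: int, flops_per_cycle: int, clock_ghz: float = CLOCK_GHZ) -> float:
    """Theoretical peak: every unit issues its widest op on every cycle."""
    return units * flops_per_cycle * clock_ghz


cell_spe = peak_gflops(7, VEC_FLOPS_PER_CYCLE)      # 7 SPEs (assumed active count)
cell_ppe_vmx = peak_gflops(1, VEC_FLOPS_PER_CYCLE)  # PPE vector unit
xenon_vmx = peak_gflops(3, VEC_FLOPS_PER_CYCLE)     # one vector unit per Xenon core

# What is left of each quoted total once the vector units are removed.
cell_rest = 217.6 - cell_spe - cell_ppe_vmx
xenon_rest = 115.2 - xenon_vmx

print(f"CELL: SPEs {cell_spe:.1f}, PPE VMX {cell_ppe_vmx:.1f}, rest {cell_rest:.1f}")
print(f"Xenon: VMX {xenon_vmx:.1f}, rest {xenon_rest:.1f}")
```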

Real-world performance, however, is not going to be dictated by how often you can use vector ops. What gets lost in the whole “general purpose” argument is what people actually mean when they speak of “general purpose computing.” To me, the bulk of “general purpose” code carries the risk of hitting memory. Chasing pointers, branching, shuffling data around, state checking and synchronization, passing messages... all of which is very important to game code, no matter what the uninitiated might think. These are not problems exclusive to game logic or AI, either. In fact, with CPUs as fast as they are these days, memory access is the single biggest limiting factor to performance. CPUs are now so fast that the speed of light and the physical distances that signals have to be routed are actually limiting factors. And with the very simplistic designs of each core in both XeCPU and CELL, that limit will show itself more clearly than on a PC CPU. It’s as if we’ve got all this computational power that utterly puts a PC to shame, but we can’t actually move data around (assuming we want things to run at a decent speed).
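The pointer-chasing problem is easiest to see as a chain of dependent loads. This sketch assumes a linked list stored as an array of “next” indices (a hypothetical layout chosen for illustration). In the linked walk, the address of each load is only known after the previous load returns; in the contiguous scan, every address can be computed up front, which is what lets prefetchers and out-of-order hardware hide latency. Python won’t show the timing difference, only the dependency structure.

```python
from typing import List


def sum_contiguous(values: List[int]) -> int:
    """Addresses are i = 0, 1, 2, ...: all known before any load completes."""
    total = 0
    for i in range(len(values)):
        total += values[i]
    return total


def sum_linked(values: List[int], next_index: List[int], head: int) -> int:
    """Each step must wait for next_index[node] before it knows where to load next."""
    total = 0
    node = head
    while node != -1:  # -1 marks the end of the list
        total += values[node]
        node = next_index[node]  # dependent load: the serial chain
    return total


values = [10, 20, 30, 40]
# Hypothetical scattered order: 2 -> 0 -> 3 -> 1 -> end
next_index = [3, -1, 0, 1]
print(sum_contiguous(values), sum_linked(values, next_index, head=2))  # 100 100
```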

This is yet another thing that can’t really be shown without actual in-the-field results. I haven’t seen any data on this matter regarding PS3 yet, but several people, even on public forums, have mentioned 500+ cycle stalls for cache misses on Xbox360. Keeping those to a minimum is going to involve a lot of changes to the way we currently do things. There will probably be a lot of cases where it’s cheaper to recalculate things every time you need them instead of storing the values off in memory. Simple branches that choose between calculations may be better handled by simply doing all the calculations and performing a conditional move. So far, though, things in the memory arena look worse for Xbox360, simply because it uses a high-latency type of memory and the CPU is farther away from memory. While GPUs don’t really care that much about latency (which is why there’s no harm in using GDDR-3 for a GPU), it makes a big difference to a CPU. Data dating back to 2003 regarding XDR DRAMs at 250 MHz suggested latencies as high as ~310 CPU clock cycles (for a 3.2 GHz CPU, not including cache latency). Since PS3 uses 400 MHz DRAMs (3.2 GHz signaling rate), it will probably fare a little better. Given that, the average cache miss on PS3 will probably cost about the same as a cache miss on a P4, except that none of that latency will be hidden, and misses are likely to happen more often because we won’t have the benefit of aggressive auto-prefetching.
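Here is a minimal sketch of the “do all the calculations, then select” idea: both candidate results are computed unconditionally, and an all-ones or all-zeros mask picks one without a jump. Python has no conditional-move instruction, so this only mirrors the pattern a compiler would lower to a cmov or a vector select; the function names and the `damage` calculation are hypothetical.

```python
def select_branchy(cond: bool, a: int, b: int) -> int:
    # A real branch: a mispredict is expensive on a simple in-order core.
    if cond:
        return a
    return b


def select_branchless(cond: bool, a: int, b: int) -> int:
    # mask is -1 (all bits set) when cond is true, 0 otherwise.
    mask = -int(cond)
    return (a & mask) | (b & ~mask)


def damage(x: int, armored: bool) -> int:
    # Hypothetical game calculation: compute both outcomes, then pick one.
    full = x * 3
    reduced = x
    return select_branchless(armored, reduced, full)


print(damage(7, armored=True), damage(7, armored=False))  # 7 21
```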

As much as a core processing difference might show up in the long run, there’s probably nowhere near as much difference between the two machines graphically. You’ve got the 500 MHz Xenos vs. the 550 MHz RSX. MS makes a big deal about Xenos’ unified shader architecture and its huge set of 48 pipelines that can serve either vertex or pixel shading operations. One of the very nice things about this is that it can dynamically balance pixel and vertex shading according to their respective demands at a given time. How well it does this is uncertain without testing in practice, and good load balancing is certainly no trivial problem. Major Nelson’s graphs for the 360 and PS3 GPUs show the two as nearly even but slightly in favor of Xenos. There are errors here, but in this particular case they are due largely to the fact that detailed info on RSX was not available at the time, so people had to guess what Sony meant when it said things like “136 shader ops per cycle.” With what we know now, it seems that those 136 ops were purely computational. However, it’s not entirely clear yet whether things like texture sampling or flow control will actually take up ALU slots. Since Sony’s E3 press event mentioned both 136 shader ops per cycle and 100 billion shader ops per second (the two numbers don’t agree at 550 MHz), there’s probably at least one other component that gets its own issue slots separate from the ALUs.
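The mismatch between Sony’s two figures is quick to quantify using only the numbers quoted above: 136 ops per cycle at 550 MHz falls well short of 100 billion ops per second, and the gap is the per-cycle work that would have to come from somewhere other than the 136 computational ops.

```python
RSX_CLOCK_HZ = 550e6
OPS_PER_CYCLE_QUOTED = 136
OPS_PER_SECOND_QUOTED = 100e9

ops_per_second_from_cycle_figure = OPS_PER_CYCLE_QUOTED * RSX_CLOCK_HZ
implied_ops_per_cycle = OPS_PER_SECOND_QUOTED / RSX_CLOCK_HZ
unaccounted = implied_ops_per_cycle - OPS_PER_CYCLE_QUOTED

print(f"136 ops/cycle at 550 MHz = {ops_per_second_from_cycle_figure / 1e9:.1f} billion ops/s")
print(f"100 billion ops/s at 550 MHz = {implied_ops_per_cycle:.1f} ops/cycle")
print(f"Unaccounted per cycle: {unaccounted:.1f}")
```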

In general, it seems that if usage of the render pipeline tilts the load heavily in one direction or the other, Xenos will have a distinct advantage. It also depends on how shader complexity is weighted between vertex and pixel work. RSX shows a clear bias towards complex pixel shaders, whereas its vertex pipes are largely no different (spec-wise) from those of Xenos. On paper alone, it looks like Xenos has an advantage in the expressive power of its shaders, largely attributable to the unified shader model. However, because RSX allows so many simultaneous independent ops in its pixel shader units (2x vector4 + 2x scalar + 1 normalize), its performance on an individual pixel shader can potentially be quite high, which means that more shader power could possibly be used in practice. Of course, in practice, the chances to use wide co-issue may not come that often, and they will most likely occur in the earliest parts of a pixel shader, where a lot of data is set up for further calculations. Either way, the focus of both units is obvious: it’s less about raw fillrate and processing huge polycounts, and more about massive shader power -- i.e. smarter polygons instead of more polygons. On the whole, the GPUs are fairly even based on what we know at this time.
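One way the 136 figure can be reached, offered here strictly as an assumption (the 24 pixel pipes and 8 vertex pipes below are the configuration widely reported at the time, not something confirmed in this article), is 24 pixel pipes each co-issuing the 5 ops listed above plus 8 vertex pipes issuing 2 ops each (assumed one vector4 and one scalar). The sketch checks that this adds up and shows how quickly the per-cycle count drops when a shader can’t fill the co-issue slots.

```python
# Assumed configuration (hypothetical for this sketch).
PIXEL_PIPES = 24
VERTEX_PIPES = 8
PIXEL_OPS_PER_PIPE = 2 + 2 + 1  # 2x vector4 + 2x scalar + 1 normalize
VERTEX_OPS_PER_PIPE = 2         # assumed: 1x vector4 + 1x scalar


def ops_per_cycle(pixel_slots_filled: int) -> int:
    """Shader ops issued per cycle when each pixel pipe fills `pixel_slots_filled` slots."""
    return PIXEL_PIPES * pixel_slots_filled + VERTEX_PIPES * VERTEX_OPS_PER_PIPE


print(ops_per_cycle(PIXEL_OPS_PER_PIPE))  # 136: every co-issue slot used
print(ops_per_cycle(2))                   # 64: only two ops co-issued per pixel pipe
```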

The fact that the real-world difference in graphics may not be that big means that, to a publisher, it makes a lot of sense to release next-gen games on multiple platforms, since there won’t be many major reasons for a consumer to choose one SKU of a title over another beyond platform loyalty. Development costs will certainly go up, and releasing on multiple platforms decreases risk without a huge added development cost. In this respect, Xbox360 has one advantage. If you look at the multi-core roadmaps of PC chipmakers like Intel and AMD, the next few years bring SMP-type designs, whereas CELL-like designs dominate the roadmap only further out. For almost anyone who has done any kind of multithreaded programming, SMP is a known quantity, which is probably the basis of MS’ argument that 360 is easier to program for. Referring to CELL as a “revolutionary concept that will change everything” is another way of saying there will be a learning curve. How gentle that curve is will depend on how good the development tools are.

Compared to the average toolset you’d otherwise see in game development, Microsoft’s developer tools have always been very good... when they work. With a few exceptions, they don’t fail as often as that may make it sound, but as Murphy’s Law states, they inevitably fail when it is most inopportune and/or in a way that maximizes annoyance. I can’t even begin to count how many times an error that Visual Studio gave me was fixed by rebooting the machine. Sony’s tools for the PS2, on the other hand, are rather insipidly lacking. In that arena, I can’t even begin to count how often the compiler itself seg faults. Tools for the first PlayStation were also severely lacking in the beginning, but the system architecture was fairly straightforward for a lot of developers at the time. There will be hell to pay if Sony doesn’t provide decent tools this time around, though, because even more than the architecture, the poor tools and documentation were a highly reviled aspect of the PS2 among developers. Fortunately, IBM and nVidia have their hands in that pile, so it won’t be an entirely lost cause. If there’s anything IBM can do well, quite possibly better than Microsoft, it’s making a good compiler. After all, they make the hardware as well, so who would understand the specific challenges better? In fact, chances are good that IBM had a hand in the Xbox360 compiler as well, if for no other reason than that Microsoft would have no reason not to use IBM’s input.

Sony’s efforts to provide good tools have always been... ummmm... well, uhhh.... How does one put this delicately? Mmmmmm... nope, can’t do it. That aside, IBM generally makes good compilers, and assuming that Sony at least used IBM’s input, that probably means we won’t end up with compilers that produce complete trash. The only dev tool anybody outside the PS3 devnet really hears about happens to be COLLADA. There’s usually a lot of confusion here because people keep comparing COLLADA to XNA, when they’re really not similar. XNA is a tools package aimed mainly at programmers, albeit an incomplete one at this stage. The promised miracle package doesn’t really exist yet, nor does Microsoft claim it’s complete. COLLADA is a file format. Its primary aim is unifying content formats to ease the art pipeline. As a programmer, I’m more concerned with XNA, but for those in the studios who have to worry about cash flow and time management, COLLADA plays a fairly important role as well. Content creation and the art pipeline are the primary cost drivers in game development, because as much as we programmers might be loath to admit it, the artists are the most important people on the team.

It’s doubtful whether COLLADA would do much to improve the rate and cost of actual content creation, but unified storage formats do mean that a common importer would work regardless of the source. It also means that studios may not have to worry about which packages their artists prefer, which in turn opens up possibilities for outsourcing. For in-house art, though, most, if not all, studios already have single-package art pipelines (exclusively MAX or exclusively Maya, etc.).
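Because COLLADA is an XML format, a “common importer” can be one parser that doesn’t care which package exported the file. Here is a minimal sketch using Python’s standard `xml.etree.ElementTree`. The document below is a hand-trimmed fragment modeled on the COLLADA 1.4 schema namespace (a real export carries asset info, accessors, and much more, so this is not a complete, schema-valid file), and `read_float_arrays` is a hypothetical helper.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

NS = {"c": "http://www.collada.org/2005/11/COLLADASchema"}

# Hand-trimmed fragment for illustration only.
DOC = """<COLLADA xmlns="http://www.collada.org/2005/11/COLLADASchema" version="1.4.1">
  <library_geometries>
    <geometry id="tri-mesh">
      <mesh>
        <source id="tri-positions">
          <float_array id="tri-positions-array" count="9">0 0 0  1 0 0  0 1 0</float_array>
        </source>
      </mesh>
    </geometry>
  </library_geometries>
</COLLADA>"""


def read_float_arrays(xml_text: str) -> Dict[str, List[float]]:
    """Hypothetical importer: collect every float_array, whichever tool wrote it."""
    root = ET.fromstring(xml_text)
    arrays: Dict[str, List[float]] = {}
    for fa in root.iterfind(".//c:float_array", NS):
        arrays[fa.get("id")] = [float(v) for v in fa.text.split()]
    return arrays


positions = read_float_arrays(DOC)["tri-positions-array"]
print(len(positions) // 3, "vertices")  # 3 vertices
```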

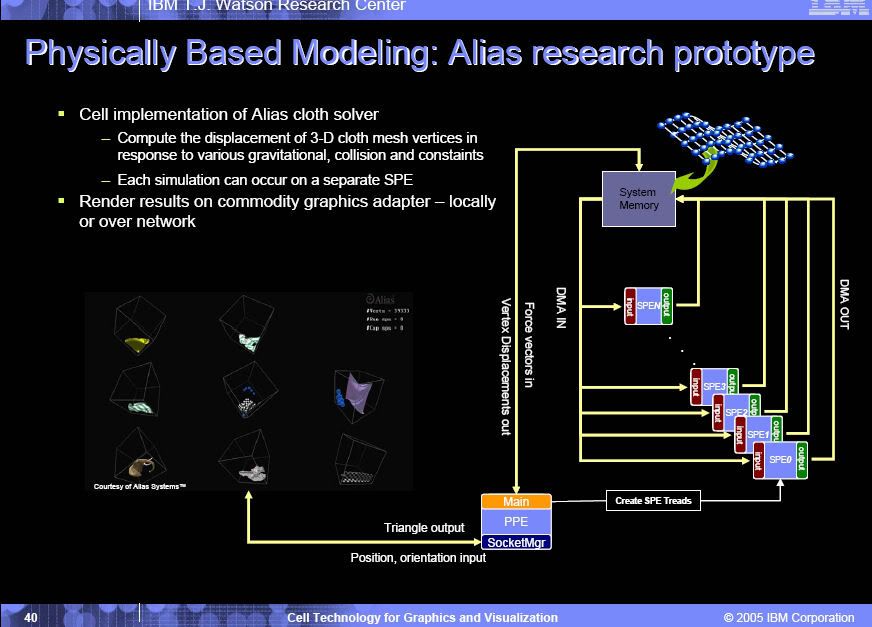

Personally, I’ve yet to see with my own eyes what actually comes with PS3 devkits, but most of the talk going around suggests that it’s fairly decent. It could be considered a veritable 180 (no pun intended) from the PS2 tools, which were, more or less, a complete farce. The general programming model for the PS3 rests heavily on automated resource management, so developers simply request any available SPE and deliver apulets to it whenever they need the SPEs. In reality, this is actually easier than the Xbox 360’s SMP model, which requires us to explicitly handle resource allocation ourselves. However, this can be a double-edged sword, in that there’s no immediate guarantee of the quality of the libraries Sony provides. That quality may be very poor in early games, though successive SDK updates may improve it over time. In either case, handling resources on your own with 360 is largely unavoidable, because threading needs are likely to differ completely from one game to another. The fact that PS3’s CELL has a central “master” processor which can issue work to several other vector processors means that an automated resource allocation scheme is perfectly feasible, because there is a known entry point for all code and the CPU is well-suited to short, fixed-function, repetitive mini-tasks. Of course, having the resources is still different from knowing precisely what to do with them, which is a problem for both consoles. This is why a lot of PS3 developers speak of their demos running only on the PPE. While most people would think this is really impressive, given the results they’re producing with such minimal hardware utilization, it really says that the learning curve is very steep.
The biggest advantage launch titles for PS3 will have is the fact that developers will have worked with the CELL much longer than 360 launch developers will have worked with the XeCPU.
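The “request any available SPE” model resembles a job pool: a master thread splits work into small, independent jobs, and the pool hands each one to whichever worker is free. This sketch uses Python’s `concurrent.futures.ThreadPoolExecutor` as a stand-in; the pool size is arbitrary and `skin_chunk` is a hypothetical mini-task. It mirrors the scheduling shape only, with none of the SPE specifics (no local store, no DMA), and the GIL means it says nothing about performance.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List

WORKERS = 6  # arbitrary pool size for this sketch


def skin_chunk(chunk: List[float], scale: float) -> List[float]:
    """Hypothetical mini-task: short, fixed-function, independent of other chunks."""
    return [v * scale for v in chunk]


def run_jobs(data: List[float], chunk_size: int, scale: float) -> List[float]:
    # The "master" splits the work into small independent jobs...
    chunks = [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]
    # ...and the pool assigns each job to whichever worker is free.
    with ThreadPoolExecutor(max_workers=WORKERS) as pool:
        results = pool.map(skin_chunk, chunks, [scale] * len(chunks))
        # map() yields results in submission order, so reassembly is simple.
        return [v for part in results for v in part]


print(run_jobs([1.0, 2.0, 3.0, 4.0, 5.0], chunk_size=2, scale=2.0))
# [2.0, 4.0, 6.0, 8.0, 10.0]
```

The contrast with the SMP case is that the developer never decides which worker runs which job; that decision lives in the pool, which is exactly where the quality of Sony’s libraries will matter.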

As far as 360 is concerned, the toolset that has been hyped beyond words is the indomitable XNA. Many of the claims about what it may provide are possibly true, although XNA is still vaporware for the moment. My main problem with XNA is that its goals are a bit misguided. If you remember the campaign that appeared about a year ago, everything seemed to suggest that XNA would decrease the amount of time spent on tech development and leave more time for the actual game logic and game content. Typically, unless you’re in a position like that of Half-Life 2 or Doom3, where brand-new tech was developed, tech development goes on in parallel with game development, and a game that is getting close to release gets branched off so that the only tech changes that go in are bugfixes, many of which might be dirty hacks specific to that title. On top of that, the number of people in a given studio who work on the core technology of an engine is pretty small. So even if you do cut down on tech development time, how much of a title’s development cost has really been saved? Not much, I’d imagine.

It’s one thing to argue that one of the consoles will be easier to program for than the other, but all too often you see ‘schmoozed’ developer comments saying that Xbox360 is so easy or PS3 is so easy. Any comments along those lines can be taken as bald-faced lies. The fact of the matter is that developers will necessarily have to optimize specifically for these new platforms. Multi-core, in-order execution, small caches: it all adds up to a load of headaches no matter who you are. It also means that there may be, at some point if not at launch, a sizeable difference in visuals and gameplay between exclusive titles and multi-platform titles. The many complaints developers have voiced about the next-gen hardware all point to one fundamental outlook: this next generation is going to be the one that separates the men from the boys.
